2026’s New Year’s resolution: Maintain balance and stay in control

by Michael Helwig

Predictions are fun, but they rarely turn out the way we expect. So, rather than speculating about what might happen, let’s examine what shaped AppSec and the cybersecurity industry in 2025 and what is likely to keep us busy in 2026. This is my personal point of view, strongly influenced by my focus on application security and the associated compliance challenges.

Architecture Complexity: Cloud, APIs, LLMs and Agents

The complexity of modern application environments increased again in 2025. With cloud-native services, expanding API ecosystems and AI embedded in everything from internal tools to customer-facing systems, keeping pace has become increasingly challenging. We are trying to understand which use cases and AI integrations are worthwhile while we continue to explore LLMs, the ‘alien tool’ (as Andrej Karpathy put it), still wondering whether prompt injection is a feature or a vulnerability. Meanwhile, autonomous agents and the Model Context Protocol (MCP) stack are already introducing additional layers of automation and new security concerns. Developers, with the help of AI, are moving fast and occasionally breaking things. Unfortunately, security practices have not fully adapted and often remain an afterthought, and efforts to retrofit ‘security by design’ into these fast-moving environments are still ongoing. Managing security across multi-cloud and API-centric architectures is a significant challenge even for well-known market players, and AI adds further complexity through the access it provides to backend systems and large datasets. In 2026, we can expect this complexity to persist. Until tools improve and security processes mature, better visibility, a healthy skepticism toward every new technology, and closer integration and communication between architecture and security may help to tackle it. We should also keep our basic security practices in mind (after all, AI is just software, isn’t it?), along with the good guidance that is already available from various sources. So what could possibly go wrong?

Regulatory pressure in the EU (CRA, NIS2 and the AI Act)

Throughout 2025, regulatory momentum continued to build, particularly within the EU. The NIS2 Directive, in effect since 2024, has finally introduced stricter cybersecurity governance requirements for essential sectors in Germany as well, while the Cyber Resilience Act (CRA) set out baseline security expectations for digital products. NIS2 applies to a significant number of organizations in the EU and Germany that are deemed relevant by size or sector (plus special ‘essential’ cases). The CRA is a product regulation that will have a major impact on a wide range of products with digital elements on the EU market, including IoT devices and desktop and mobile apps. It will also affect open-source projects with a commercial background, although ‘pure’ open-source projects remain exempt. Meanwhile, the EU AI Act, which is set to become largely applicable in 2026, will impose significant obligations on providers and deployers of high-risk AI systems (and transparency obligations on others). Application and product security are central to all of these regulations. These regulations can serve as a business case for better application security, but AppSec teams also need to put compliance with them on their roadmaps.

Continued Supply Chain Risks

Open-source supply chain issues are nothing new, but the attacks are becoming more sophisticated and mature, and their impact is growing. Automated, AI-driven attacks such as Shai-Hulud offer a glimpse of what may be to come. By November 2025, Shai-Hulud 2.0 had compromised 796 npm packages (with over 20 million weekly downloads) to steal credentials from developers and CI/CD environments. Shortly afterwards, a critical vulnerability in React Server Components, dubbed ‘React2Shell’, was exploited on a large scale by both opportunistic cybercriminals and state-linked espionage groups within days of its disclosure. Although the adoption of SBOMs and stronger dependency controls has increased and tools are readily available, the overall threat level remains high. As an industry, we have started to adopt processes and tools to mitigate these growing risks, but we are far from having them under control.

Developer Velocity vs. Security Debt (or: Vibe Coding)

In 2025, nearly 50% of developers used AI tools daily to increase productivity (though perhaps not in every scenario, and with somewhat declining confidence in the tools). The definition of ‘developer productivity’ remains vague, but AI now generates 41% of code, or even more. Vibe coding, in which developers iterate on AI prompts until something functional emerges, is becoming more common. However, this workflow often prioritizes functionality over security, introducing vulnerabilities or insecure defaults. The number of CVEs surpassed 48,000 in 2025, a 20% increase over the previous year, indicating that software security quality remains a systemic challenge. Developers may accept code suggestions without fully understanding them, which leads to inadequate oversight of what the code actually does and, as even Cursor’s CEO has warned, to ‘shaky foundations’. Therefore, even though AI is being deployed for vulnerability detection and automated code fixes (which may themselves introduce new issues), developer training and a sustainable security culture remain essential (excluding, perhaps, phishing training). In terms of development culture, security should enable rather than control, but it must keep pace with the increased development speed through scaling, automation and prioritization.

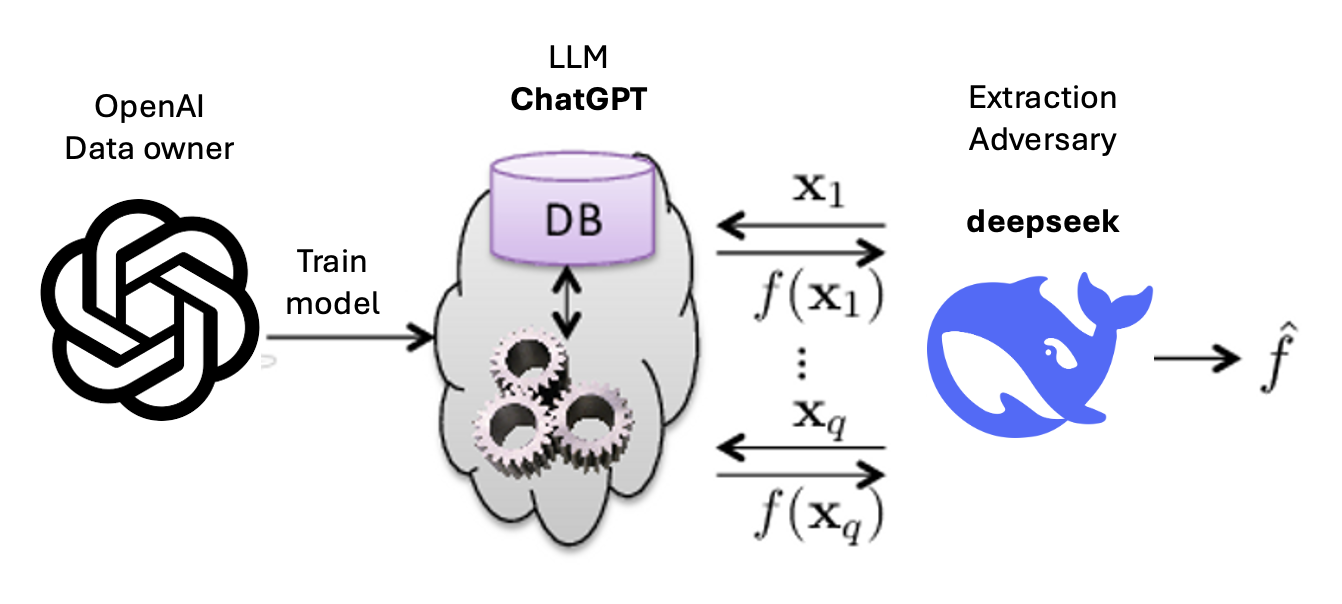

AI changing both attack and defense

AI continues to be integrated into security processes. Workflow automation tools are entering the security space, but AppSec SOAR (security orchestration, automation and response) and ASPM (application security posture management) have yet to become established. AI-oriented use cases such as automatic vulnerability fixes, ticket enrichment and AI-supported vulnerability triage, as well as support for manual processes such as threat modelling, are continuously being explored. AI-assisted systems aim to help teams prioritize vulnerabilities based on real exploit risk, correlate code and runtime data for richer context, and filter out false positives to reduce alert fatigue. However, AI-assisted vulnerability management tools that live up to the high expectations have not yet fully arrived. Automated penetration testing tools are improving, but attackers are also using AI as a weapon. More sophisticated phishing campaigns continue to erode user trust and are prompting changes to IAM mechanisms (MFA, of course, and passkeys). Threat actors have weaponized AI to scale up their campaigns, using generative AI to automate malware development, produce convincing phishing lures and create increasingly realistic deepfake content for social engineering attacks. This ‘AI augmentation’ of attacks enables even less-skilled adversaries to carry out sophisticated operations by letting AI handle the heavy lifting, from writing exploit code to solving problems on the fly. Meanwhile, the average time to exploit has shortened once again and has now turned negative, at -1 day: on average, vulnerabilities are being exploited before patches are available.

What’s next?

So, amid all these somewhat unpredictable and rapidly changing developments, what’s next? I suppose it will be a continuation and intensification of what we have already seen. Organizations have to manage ever-growing volumes of security-relevant data, including architecture diagrams, threat models, cloud configurations, runtime telemetry, compliance artifacts and AI outputs. At the same time, AI is beginning to steer processes actively and independently, even though we do not yet fully understand the risks involved. The opportunity lies in integrating knowledge silos more effectively to gain a clearer view of the relevant risks and to analyze which ones to focus on. The common thread (and threat), however, is complexity: in technology, in regulation and in adversaries. We must all navigate an environment in which complex, rapidly changing architectures and technologies require constant attention, and we must avoid failure while moving forward at an ever-increasing speed. Let’s work together to maintain balance and stay in control.