Is it all just stolen? – DeepSeek and Model Theft

Written by Benjamin Altmiks

ChatGPT has long been integrated into the everyday working lives of most computer users – far beyond the tech industry. But despite its success, key criticisms remain, first and foremost the enormous consumption of resources.

The ‘Neue Zürcher Zeitung’ reported that a single ChatGPT query consumes up to 30 times more energy than a regular Google search.

A new language model that needs fewer chips thanks to more efficient computation, and therefore consumes less energy, would undoubtedly be a significant step forward. The case for DeepSeek rests on precisely this: a more resource-efficient algorithmic foundation as a purely technical improvement.

But what if this supposed breakthrough is not so innovative after all – but has largely been imitated or even copied?

It is precisely this suspicion that is now being raised – by OpenAI itself. As the Financial Times reports, the company claims to have evidence that China’s DeepSeek used OpenAI’s models to train its own AI competitor.

But how would this even be possible? The accusation revolves around Model Theft – the theft of an already trained machine learning model. This threat is not new: back in 2023, OWASP listed it among its Top 10 Risks for Large Language Models (LLMs) as well as among its Top 10 Machine Learning Risks.

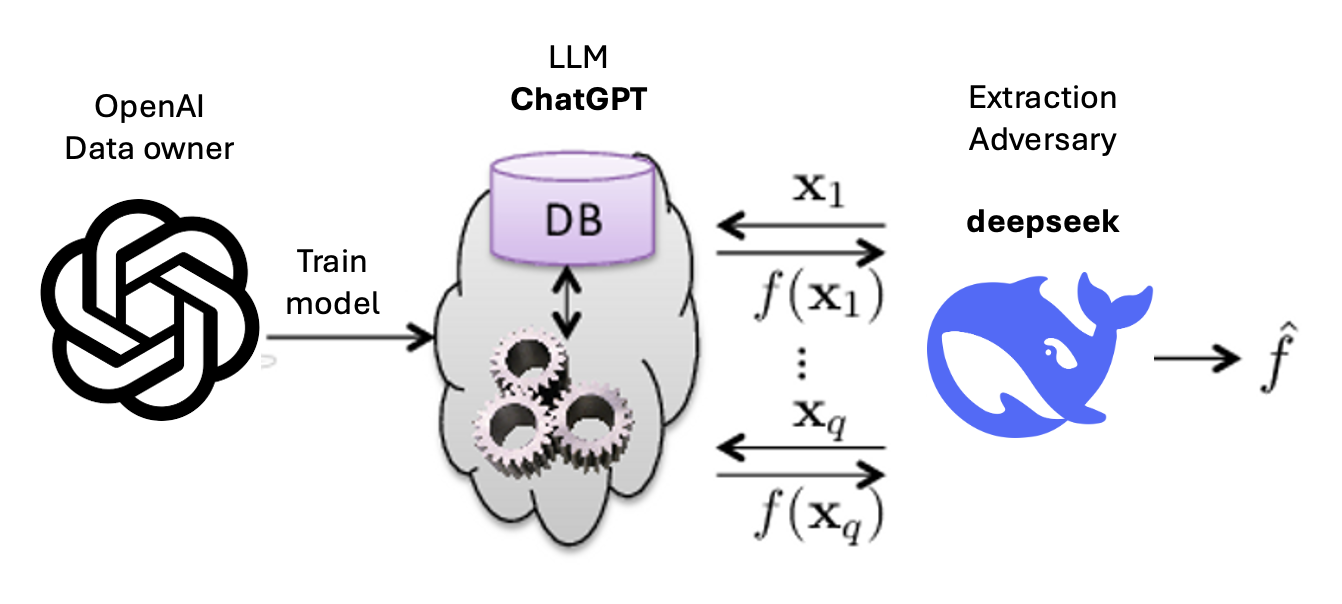

The concept itself is as simple as it is effective: a huge number of targeted queries are sent to an existing model – in this case ChatGPT. The responses generated then serve as training data for a new model that builds on this knowledge. This is how DeepSeek is said to have gained a decisive advantage, as the graphic illustrates.

The figure is based on Tramèr et al. (2016) but has been adapted and extended.
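To make the principle more tangible, here is a deliberately tiny sketch of the query-and-retrain loop in Python. It is a toy illustration only and says nothing about what DeepSeek actually did: the "victim" is a hypothetical two-parameter classifier rather than a language model, and all names (`victim_predict`, `extract_model`, `agreement`) are invented for this example. The attacker sees only the labels the victim returns, yet can fit a surrogate that behaves very similarly.

```python
import math
import random

# Hypothetical "victim" model. The attacker can call it,
# but never sees these parameters.
_SECRET_W = (2.0, -1.0)
_SECRET_B = 0.3


def victim_predict(x):
    """Black-box API: returns only a label (0 or 1) for a 2-D input."""
    score = _SECRET_W[0] * x[0] + _SECRET_W[1] * x[1] + _SECRET_B
    return 1 if score > 0 else 0


def random_query():
    """A random input point in the square [-1, 1] x [-1, 1]."""
    return (random.uniform(-1, 1), random.uniform(-1, 1))


def extract_model(query_fn, n_queries=500, steps=300, lr=1.0):
    """Train a surrogate purely from the victim's answers."""
    # Step 1: send many queries and record the responses.
    queries = [random_query() for _ in range(n_queries)]
    labels = [query_fn(x) for x in queries]

    # Step 2: use the (query, response) pairs as training data.
    # Here: logistic regression fitted by batch gradient descent.
    w0 = w1 = b = 0.0
    for _ in range(steps):
        g0 = g1 = gb = 0.0
        for (x0, x1), y in zip(queries, labels):
            p = 1.0 / (1.0 + math.exp(-(w0 * x0 + w1 * x1 + b)))
            err = p - y
            g0 += err * x0
            g1 += err * x1
            gb += err
        w0 -= lr * g0 / n_queries
        w1 -= lr * g1 / n_queries
        b -= lr * gb / n_queries

    def surrogate(x):
        return 1 if w0 * x[0] + w1 * x[1] + b > 0 else 0

    return surrogate


def agreement(model_a, model_b, n_samples=1000):
    """Fraction of fresh random inputs on which both models agree."""
    points = [random_query() for _ in range(n_samples)]
    same = sum(model_a(x) == model_b(x) for x in points)
    return same / n_samples


if __name__ == "__main__":
    random.seed(0)
    surrogate = extract_model(victim_predict)
    rate = agreement(victim_predict, surrogate)
    print(f"agreement with victim: {rate:.2%}")
```

The surrogate never touches `_SECRET_W` or `_SECRET_B`; everything it learns comes from the responses alone. With a real language model the same idea applies at a vastly larger scale, with prompts as queries and generated text as training data.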

In the graphic shown, OpenAI is labelled as the data owner, although ChatGPT was largely trained on publicly accessible data. This raises a fundamental ethical question: shouldn’t results generated from public data also be freely available and usable for the general public? At the same time, however, it should not be forgotten that OpenAI has invested considerable resources – both in the form of data preparation and classification as well as financial investment – in the development of the model. This work must be protected, which is why it would not be acceptable if DeepSeek published a model based entirely on ChatGPT.

However, only the company itself knows what data DeepSeek ultimately used for the training. Regardless of this, the technical approach remains both impressive and worrying. Even if the model is merely a successful replica of ChatGPT, this represents a considerable technological breakthrough – after all, no other company has yet succeeded in developing a competitive model of comparable quality on this basis.

Companies that train their own language models on internal company data will need to be more vigilant in future, because these models could likewise be copied and misused by other companies. Whether it is Microsoft, whose market strategy a competitor might want to predict, or a medium-sized company whose internal processes and strategic decisions could be reconstructed using such models – language models have to be treated as valuable assets and protected accordingly.

At secureIO, we show you how language models and other machine learning systems can be strategically secured for the long term. Book a webinar, presentation, or a practical training course directly at your company now.